Drawing on its founder’s experience as a former intelligence officer in the British Army, our client saw the need for software that would let a trained operator quickly gather, filter, and analyze real-time intelligence about specific geographies and keywords in support of critical operations. They contracted Vaporware to design, prototype, and build the platform that would ultimately be called Vendetta.

Plan

We began by listening. The founder had served as an “operator” in the British Army, often tasked with manually gathering intelligence from social media and a wide range of other internet sources to support ongoing missions. He knew software could make this work more efficient, but he needed a fresh perspective to lay the groundwork.

After identifying and understanding the core need, we led a series of creative exercises to generate several possible solutions that could streamline the operator’s task of acquiring and filtering information. We then converged on a single direction, agreeing as a team on what success looked like and which assumptions we needed to test to validate the platform. This process uncovered the operator’s critical path: gathering, sorting, and analyzing large volumes of information from start to finish.

Using the critical path, we developed a prototype storyboard and an assumptions table to guide future user testing. We also defined metrics that set shared expectations for the next steps of development. Following the Plan session, we delivered a custom Blueprint document that clearly articulated our findings and research and would serve as a reference for future design and development.

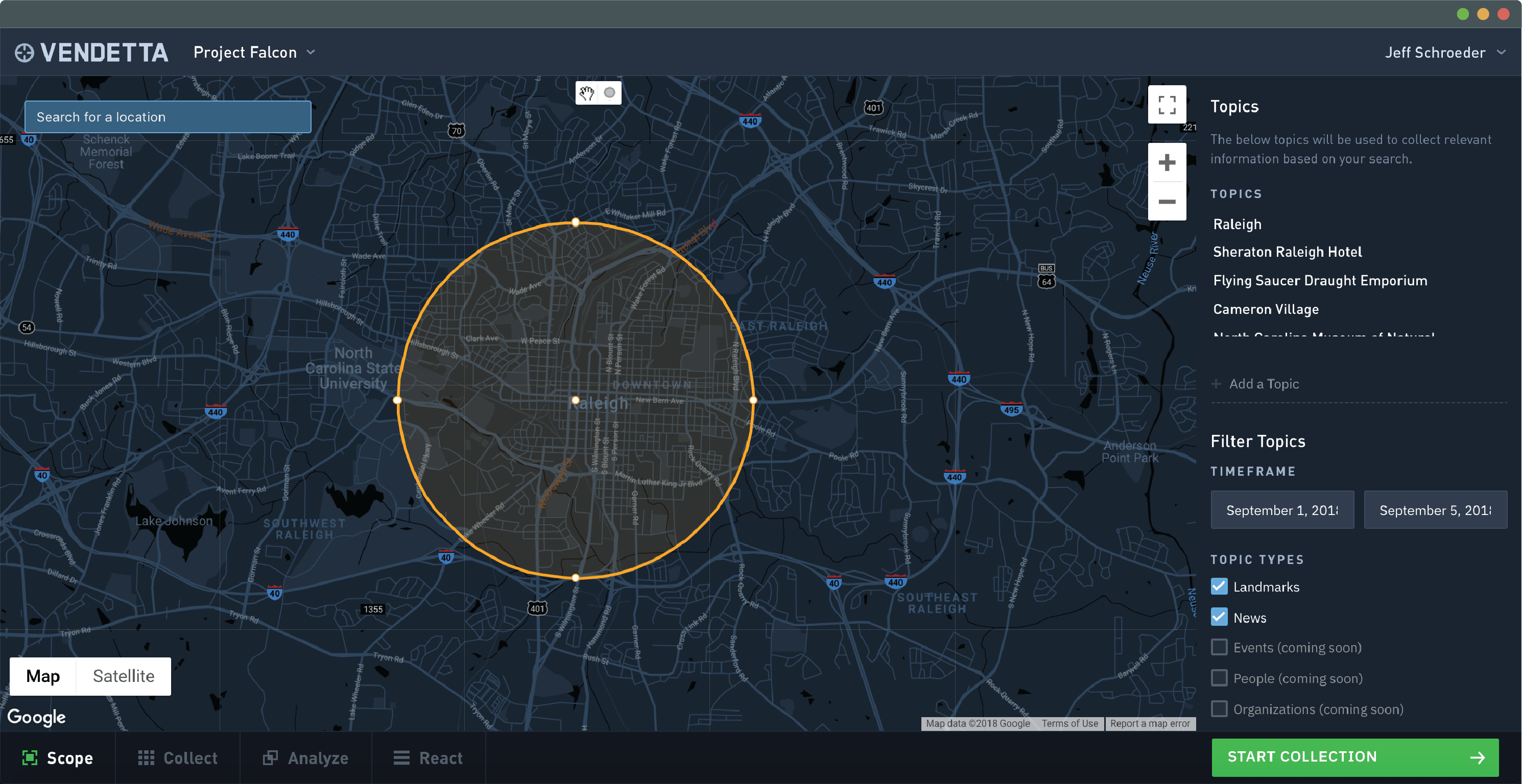

An operator defines the scope of the mission by geography, time frame, and relevant topics or keywords.
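To make the scope definition concrete, here is a minimal TypeScript sketch of how a mission scope (a drawn boundary, a time window, and keywords) might be checked against an incoming post. The `MissionScope` and `Post` types, the `matchesScope` helper, and the ray-casting geofence are illustrative assumptions, not Vendetta’s actual implementation, which ran on a Rails backend.

```typescript
// Illustrative types; field names are assumptions, not Vendetta's schema.
type LatLng = { lat: number; lng: number };

interface MissionScope {
  boundary: LatLng[]; // polygon vertices, e.g. as drawn on a map
  start: Date; // inclusive start of the time frame
  end: Date; // exclusive end of the time frame
  keywords: string[]; // topics or keywords of interest
}

interface Post {
  text: string;
  createdAt: Date;
  location?: LatLng;
}

// Ray-casting point-in-polygon test. It treats lat/lng as planar coordinates,
// an approximation that is reasonable for small, local boundaries.
function insideBoundary(p: LatLng, polygon: LatLng[]): boolean {
  let inside = false;
  for (let i = 0, j = polygon.length - 1; i < polygon.length; j = i++) {
    const a = polygon[i];
    const b = polygon[j];
    // Does edge a-b straddle the point's latitude, crossing east of the point?
    const crosses =
      (a.lat > p.lat) !== (b.lat > p.lat) &&
      p.lng < ((b.lng - a.lng) * (p.lat - a.lat)) / (b.lat - a.lat) + a.lng;
    if (crosses) inside = !inside;
  }
  return inside;
}

// A post matches when it falls inside the time window and the boundary
// and mentions at least one keyword (case-insensitive substring match).
function matchesScope(post: Post, scope: MissionScope): boolean {
  const t = post.createdAt.getTime();
  if (t < scope.start.getTime() || t >= scope.end.getTime()) return false;
  if (!post.location || !insideBoundary(post.location, scope.boundary)) return false;
  const text = post.text.toLowerCase();
  return scope.keywords.some((k) => text.includes(k.toLowerCase()));
}
```

Posts without coordinates are simply excluded here; whether to drop them, infer a location, or hold them for manual review is a policy choice this sketch does not try to settle.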

Prototype

Using the results of the Plan session, we created a prototype of the Vendetta platform in InVision. This let every team member review the most up-to-date designs and give our designers immediate feedback. Through several iterations, the team arrived at an initial prototype that effectively turned sourced data into a powerful but comprehensible report.

Given the potential scaling challenges of the design, we determined that a code prototype should come next, before undertaking full product development. The goal of the code prototype was to examine the capabilities of the various APIs and technologies required to implement the Vendetta design. With Twitter as a cornerstone integration, we focused initial development on the various tiers of the Twitter API and on whether Rails could provide the throughput a backend system would need.

In addition to Twitter data, Vendetta needed a wide range of supporting services, including maps, image recognition, translation, and news and topic services. We tested cloud services from Google and Amazon (AWS) for image recognition and translation, as well as topic and news services from Google and Datamuse.

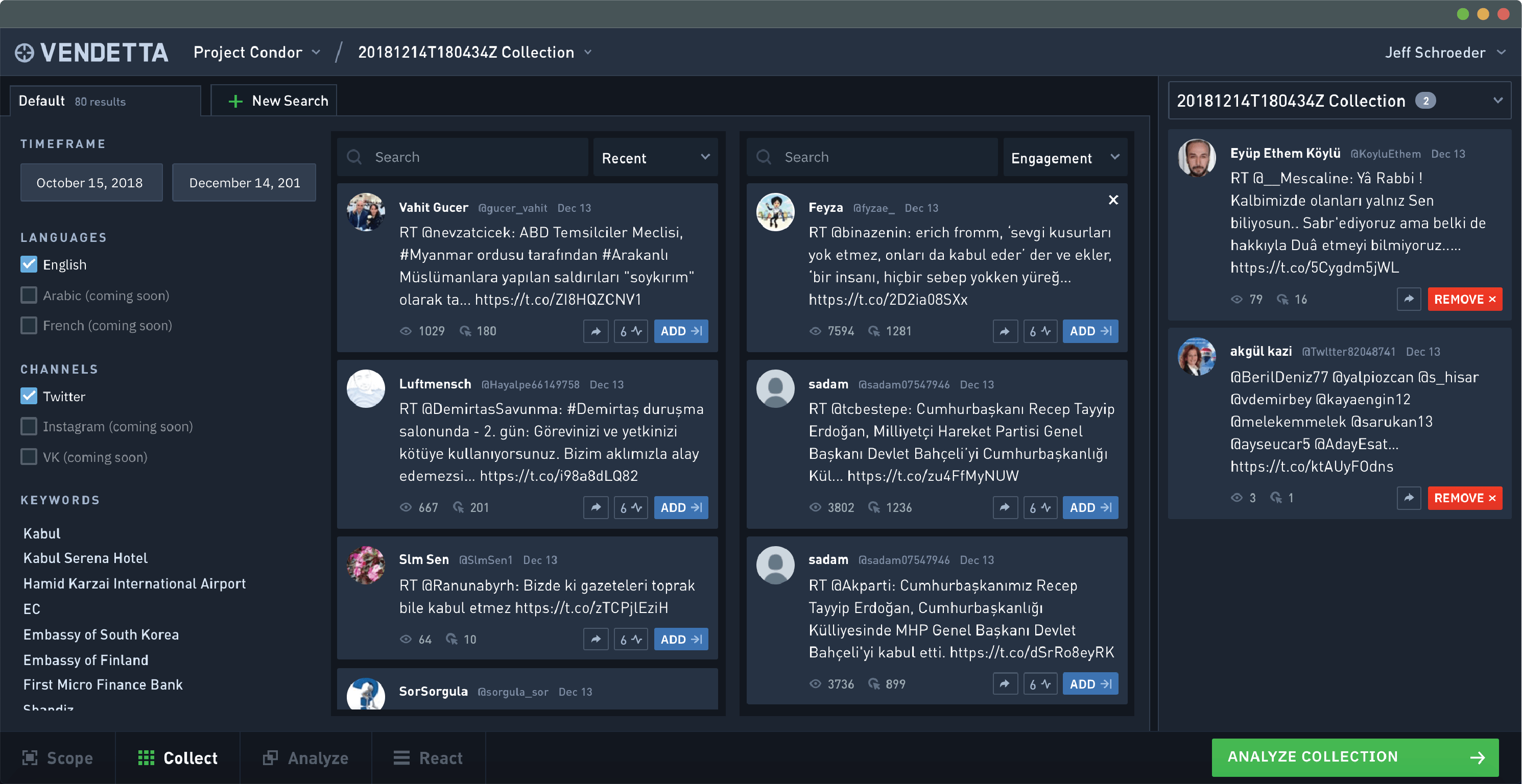

Based on the mission scope, the system gathers relevant information, in this case Tweets. The operator can then add selected Tweets to a Collection.
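A Collection can be modeled as an ordered set of the items an operator has selected. The sketch below is hypothetical (the `Collection` class and the `SourceItem` fields are assumptions for illustration); it keys each item by source and id so that adding the same Tweet twice has no effect.

```typescript
// Hypothetical item shape; the fields are assumptions for illustration.
interface SourceItem {
  source: string; // e.g. "twitter"
  id: string; // the item's id within that source
  text: string;
}

// An ordered set of items the operator has selected. Items are keyed by
// source and id, so adding the same Tweet twice is a no-op.
class Collection {
  readonly name: string;
  private readonly items = new Map<string, SourceItem>();

  constructor(name: string) {
    this.name = name;
  }

  private static key(source: string, id: string): string {
    return `${source}:${id}`;
  }

  // Returns true if the item was added, false if it was already present.
  add(item: SourceItem): boolean {
    const k = Collection.key(item.source, item.id);
    if (this.items.has(k)) return false;
    this.items.set(k, item);
    return true;
  }

  // Returns true if an item was removed.
  remove(source: string, id: string): boolean {
    return this.items.delete(Collection.key(source, id));
  }

  // Map iterates in insertion order, so items come back in the order added.
  list(): SourceItem[] {
    return Array.from(this.items.values());
  }

  get size(): number {
    return this.items.size;
  }
}
```

Keying on source as well as id leaves room for items from other data sources whose ids might collide with Tweet ids.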

Produce

Over a six-week period, we developed the MVP as two separate codebases: an API written in Ruby on Rails and a web frontend written in React JS. Rails let us quickly build a robust, extensible engine that could support the platform’s future growth and features, while React JS was the best choice for implementing the modern, dynamic interface design. The client was given a staging URL so they could monitor development progress daily.

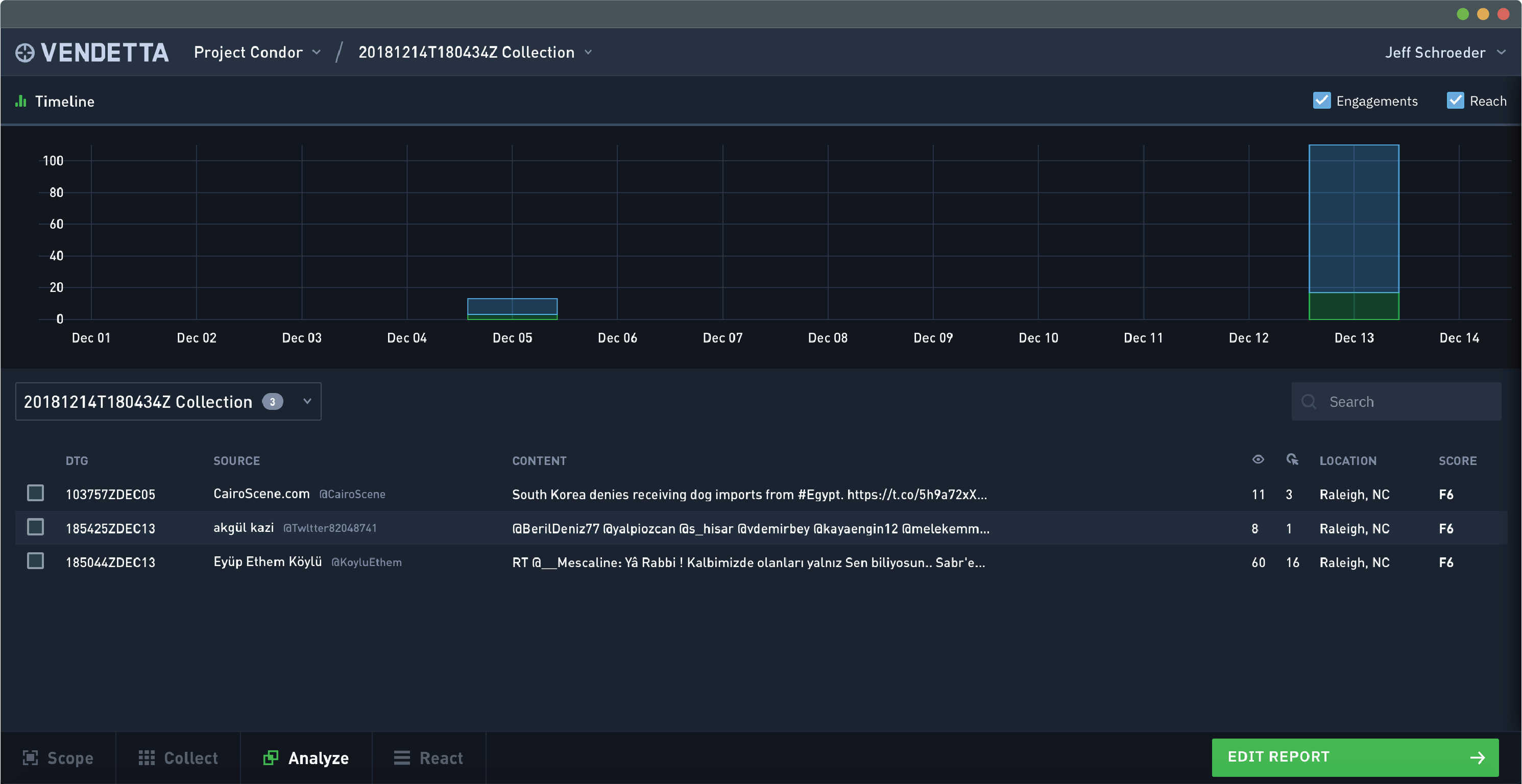

After collecting relevant data, the operator can then analyze relationships in the data by timeline, geography, or source.
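As an illustration of timeline and source analysis, the following sketch groups collected items into hourly UTC buckets and counts items per source account. The `CollectedItem` shape and both helpers, `timeline` and `bySource`, are assumptions for illustration rather than Vendetta’s actual analysis code.

```typescript
// Hypothetical shape of a collected item; the fields are assumptions.
interface CollectedItem {
  source: string; // e.g. "twitter"
  author: string;
  createdAt: Date;
}

// Groups items into fixed-width UTC time buckets (one hour by default) and
// returns [bucketStartISO, count] pairs in chronological order.
function timeline(items: CollectedItem[], bucketMs = 60 * 60 * 1000): Array<[string, number]> {
  const counts = new Map<number, number>();
  for (const item of items) {
    const start = Math.floor(item.createdAt.getTime() / bucketMs) * bucketMs;
    counts.set(start, (counts.get(start) ?? 0) + 1);
  }
  return Array.from(counts.entries())
    .sort((a, b) => a[0] - b[0])
    .map(([start, n]): [string, number] => [new Date(start).toISOString(), n]);
}

// Counts items per (source, author) pair, a simple first look at which
// accounts dominate a collection.
function bySource(items: CollectedItem[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const { source, author } of items) {
    const key = `${source}:${author}`;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}
```

Using `Math.floor` rather than the remainder operator keeps bucket boundaries correct even for timestamps before 1970, where `%` would return a negative remainder.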

Results

By the end of the six-week development sprint, the client had a fully functional MVP ready to demonstrate and test with potential clients. Although Twitter was the inaugural data source, the application was built so that additional data sources could be plugged in with minimal development work. The application was also designed and built to scale as the platform grew.
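One common way to make data sources pluggable is a shared interface plus a registry, so adding a source means writing one adapter rather than touching the rest of the pipeline. This TypeScript sketch assumes hypothetical `DataSource`, `Query`, `NormalizedItem`, and `SourceRegistry` names; it is not the project’s Rails code, and `StaticSource` is an in-memory stand-in where a real adapter would call an external API such as Twitter’s.

```typescript
// Hypothetical plug-in contract: each source turns a query into normalized
// items, so downstream code never needs source-specific logic.
interface Query {
  keywords: string[];
  since: Date;
}

interface NormalizedItem {
  source: string;
  id: string;
  text: string;
  createdAt: Date;
}

interface DataSource {
  readonly name: string;
  fetch(query: Query): Promise<NormalizedItem[]>;
}

class SourceRegistry {
  private readonly sources = new Map<string, DataSource>();

  register(source: DataSource): void {
    if (this.sources.has(source.name)) {
      throw new Error(`Data source already registered: ${source.name}`);
    }
    this.sources.set(source.name, source);
  }

  // Queries all registered sources concurrently and merges results, newest first.
  async search(query: Query): Promise<NormalizedItem[]> {
    const batches = await Promise.all(
      Array.from(this.sources.values()).map((s) => s.fetch(query)),
    );
    const merged = ([] as NormalizedItem[]).concat(...batches);
    return merged.sort((a, b) => b.createdAt.getTime() - a.createdAt.getTime());
  }
}

// In-memory stand-in for testing; a real adapter would call an external API here.
class StaticSource implements DataSource {
  readonly name: string;
  private readonly items: NormalizedItem[];

  constructor(name: string, items: NormalizedItem[]) {
    this.name = name;
    this.items = items;
  }

  async fetch(query: Query): Promise<NormalizedItem[]> {
    const keywords = query.keywords.map((k) => k.toLowerCase());
    return this.items.filter(
      (item) =>
        item.createdAt.getTime() >= query.since.getTime() &&
        keywords.some((k) => item.text.toLowerCase().includes(k)),
    );
  }
}
```

The registry never inspects a source beyond its `name` and `fetch`, which is what keeps a new source to roughly one adapter class in this design.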

Core App Platform & API: Ruby on Rails

Frontend JavaScript: React JS

Authentication: JSON Web Tokens

Initial Data Source: Twitter API

Drawing Geo Boundaries: Google Maps API

Image Recognition: Google Cloud Vision API

Translation: Google Cloud Translation API

API Specifications: Swagger

Source and Reliability Ranking: Custom Intellectual Property
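The stack lists JSON Web Tokens for authentication, presumably for requests from the React frontend to the Rails API. As a hedged illustration of what an HS256 token involves, here is a minimal sign-and-verify sketch using Node’s built-in `crypto` module. The `Claims` shape and the helper names are assumptions; production code would normally rely on a maintained JWT library rather than hand-rolled verification.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical claim set; real tokens would carry whatever the API needs.
interface Claims {
  sub: string; // subject, e.g. an operator's user id
  exp: number; // expiry time, seconds since the Unix epoch
}

// JSON-encodes a value and base64url-encodes the UTF-8 bytes.
function base64urlJson(value: unknown): string {
  return Buffer.from(JSON.stringify(value), "utf8").toString("base64url");
}

function hmacSha256(secret: string, data: string): Buffer {
  return createHmac("sha256", secret).update(data).digest();
}

// Produces header.payload.signature with an HMAC-SHA256 (HS256) signature.
function sign(claims: Claims, secret: string): string {
  const header = base64urlJson({ alg: "HS256", typ: "JWT" });
  const payload = base64urlJson(claims);
  const signature = hmacSha256(secret, `${header}.${payload}`).toString("base64url");
  return `${header}.${payload}.${signature}`;
}

// Returns the claims if the signature is valid and the token has not expired,
// otherwise null. The algorithm is fixed to HS256 rather than read from the
// header, so an attacker-controlled "alg" value is never trusted.
function verify(
  token: string,
  secret: string,
  nowSeconds: number = Math.floor(Date.now() / 1000),
): Claims | null {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, payload, signature] = parts;
  const expected = hmacSha256(secret, `${header}.${payload}`);
  const given = Buffer.from(signature, "base64url");
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) return null;
  const claims = JSON.parse(Buffer.from(payload, "base64url").toString("utf8")) as Claims;
  return claims.exp > nowSeconds ? claims : null;
}
```

The constant-time comparison matters because a naive string comparison can leak, through timing, how many leading signature bytes an attacker has guessed correctly.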